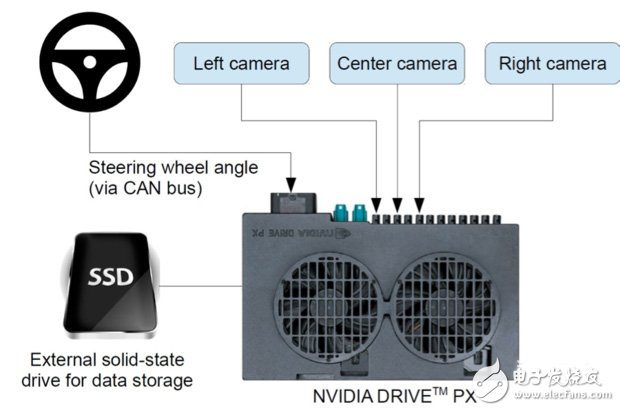

Automated driving technology has been a research hotspot in recent years. Tesla has developed a system that can drive autonomously on a limited number of vehicles, and it is said to be about two years from general availability. Over the past nine months, an Nvidia engineering team developed a self-driving car using one camera, a Drive-PX embedded computer, and 72 hours of training data. Nvidia published the results of this work, the DAVE2 project, as an academic preprint titled "End to End Learning for Self-Driving Cars" on arXiv.org, the preprint server operated by Cornell University Library.

Why is it called DAVE2? The name refers to DAVE (DARPA Autonomous Vehicle), an earlier project of the Defense Advanced Research Projects Agency (DARPA). Although neural networks and self-driving cars may seem like new inventions, Google's Geoffrey Hinton, Facebook's Yann LeCun, and the University of Montreal's Yoshua Bengio have been developing these technologies, a branch of AI, for the past 20 years. DARPA's DAVE project studied neural-network-driven autonomous vehicles, and its predecessor was ALVINN, a project proposed by Carnegie Mellon University in 1989.

What has changed is that GPUs have made this research economically viable. Neural networks and image-recognition applications such as driverless cars have recently taken off for two main reasons. First, the GPUs that render graphics on phones have become very powerful and cheap. Mounted on a board-level supercomputer, GPUs can solve massively parallel neural network problems at a price that every AI researcher and software developer can afford. Second, large labeled image datasets now exist, and they can be used to train massively parallel neural networks, executed on GPUs, to see and recognize objects captured by a camera.

Mapping human driving patterns
The Nvidia team trained a convolutional neural network to map the raw pixels captured by a single front-facing camera directly to driving commands. The breakthrough in Nvidia's approach is that the car learns on its own by watching humans drive. Although the deployed system uses one camera and a Drive-PX embedded computer, the training system uses three cameras and two computers to capture 3D video images and the steering angles of a human-driven car. This data is used to train the system to "see" and "drive." Nvidia records the steering angle as the training signal and maps the human driver's behavior onto the bitmap images recorded by the cameras. Inside the convolutional neural network, the system learns internal representations of the driving process, such as detectors for useful road features like lane lines, cars, and road outlines. The car senses road conditions, other cars, and obstacles, and the open-source machine learning framework Torch 7 processes this input and then steers the test car. Training uses images sampled at 10 frames per second, because at 30 frames per second consecutive frames differ so little that the extra frames add no learning value. The test cars were a 2016 Lincoln MKZ and a 2013 Ford Focus. At the heart of the machine learning pipeline is a convolutional neural network, built with Torch 7, that simulates steering. Images from the human-driven car are sampled at 10 fps, and the network issues a simulated steering command for each frame. The researchers compare this simulated command with the human driver's steering angle, and this comparison is what teaches the system to steer.
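As a rough illustration of the training signal described above, the sketch below subsamples a 30 fps recording down to 10 fps and scores a model's steering commands against the recorded human steering angles. The function names (`subsample`, `steering_loss`, `toy_model`), the list-of-floats frame type, and the stand-in model are hypothetical. Using mean squared error as the comparison follows the DAVE2 paper's description of its training objective, but this is an illustrative sketch, not Nvidia's code.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical representation: a frame is a flat list of pixel values.
Frame = List[float]
Sample = Tuple[Frame, float]  # (frame, human steering angle)


def subsample(frames: Sequence[Frame], angles: Sequence[float],
              source_fps: int = 30, target_fps: int = 10) -> List[Sample]:
    """Pair frames with steering angles, keeping every (source_fps // target_fps)-th one.

    Consecutive frames at 30 fps are nearly identical, so keeping only
    every third frame (10 fps) loses little training information.
    """
    if len(frames) != len(angles):
        raise ValueError("each frame needs a matching steering angle")
    step = max(1, source_fps // target_fps)  # 3 for 30 fps -> 10 fps
    return [(frames[i], angles[i]) for i in range(0, len(frames), step)]


def steering_loss(model: Callable[[Frame], float],
                  samples: Sequence[Sample]) -> float:
    """Mean squared error between the model's steering command and the human angle."""
    if not samples:
        raise ValueError("no samples to evaluate")
    errors = [(model(frame) - human_angle) ** 2 for frame, human_angle in samples]
    return sum(errors) / len(errors)


def toy_model(frame: Frame) -> float:
    """Hypothetical stand-in for the CNN: steers proportionally to the first pixel."""
    return 0.1 * frame[0]


if __name__ == "__main__":
    # Nine frames "recorded" at 30 fps, each with the human steering angle.
    frames = [[float(i)] for i in range(9)]
    angles = [0.1 * i for i in range(9)]
    samples = subsample(frames, angles)  # keeps frames 0, 3 and 6
    print(len(samples), steering_loss(toy_model, samples))  # prints: 3 0.0
```

In a real training loop this loss would be minimized by adjusting the network's weights (the article says Torch 7 was used for that); here the model is a fixed function so the sketch stays dependency-free.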
The researchers then analyze the differences between the network's commands and the human steering angles. The car drives the test route for 3 hours, covering about 100 miles; the trip is recorded on video, and the test data used in the simulation comes from that video.

Highway test
Once the convolutional neural network drives well in simulation, the system can be loaded onto the test vehicle for further testing on real roads. Road testing improves the system further: a driver must supervise the car the whole time and intervene promptly whenever the system makes a mistake. Each correction is fed back into the machine learning system to improve steering accuracy. On the New Jersey Turnpike, the car drove the first 10 miles fully autonomously, and in early tests it drove itself 98% of the time. Nvidia demonstrated that a convolutional neural network can learn the entire task of lane and road following without manually decomposing it into road or lane-marking detection, semantic abstraction, path planning, and control. By driving the car in different weather and lighting conditions, on highways and local roads, and collecting less than 100 hours of training data processed with Torch 7, the system learned to perform this task. In the report, Nvidia indicated that the system is not ready for production, writing: "More work is needed to improve the robustness of the network, to find methods to verify the robustness, and to improve visualization of the internal processing steps of the network."
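The 98% figure is best read as a share of driving time. The DAVE2 paper defines an "autonomy" metric that charges each human intervention a fixed 6 seconds (the time to take over, recenter the car, and hand control back): autonomy = (1 - interventions × 6 s / elapsed time) × 100. The sketch below implements that formula; the function name is hypothetical, and whether the article's 98% was computed exactly this way is an assumption.

```python
def autonomy_percent(interventions: int, elapsed_seconds: float,
                     seconds_per_intervention: float = 6.0) -> float:
    """Percentage of driving time the network was in control.

    Each human intervention is charged a fixed time penalty
    (6 seconds in the DAVE2 paper) for taking over, recentering
    the car, and handing control back to the network.
    """
    if elapsed_seconds <= 0:
        raise ValueError("elapsed time must be positive")
    if interventions < 0:
        raise ValueError("intervention count cannot be negative")
    lost_seconds = interventions * seconds_per_intervention
    return (1.0 - lost_seconds / elapsed_seconds) * 100.0


if __name__ == "__main__":
    # Worked example: 10 interventions in a 600-second drive.
    print(round(autonomy_percent(10, 600), 1))  # prints: 90.0
    # Hypothetical: 36 interventions over a 3-hour (10,800 s) drive.
    print(round(autonomy_percent(36, 10_800), 1))  # prints: 98.0
```

Because the penalty is a fixed time per intervention, the same number of interventions costs less autonomy on a longer drive, which is why the metric is reported alongside the elapsed time or distance.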

As outdoor LED screens grow in popularity, they are increasingly used for lighting and storefront displays, becoming a striking feature of the modern cityscape.

SMD Outdoor Fixed LED Screen features:

1. Strong visual impact: dynamic pictures and sound fully engage the audience and effectively convey advertising messages that guide consumption. Amid overwhelming advertising, limited audience memory, and an unlimited flow of information, LED displays have become a scarce resource.

2. Flexible shape: the screen can be straight or curved to follow the shape of the wall. This variety of shapes allows more personalized designs with strong visual impact.

3. High audience credibility: compared with the rejection and distrust that advertising flyers handed out on the street tend to provoke, an LED display lets the audience take in the message without having to actively accept it. This avoids the barrier created when audiences consciously avoid advertising, and greatly improves the credibility and reach of the LED screen's advertising content.

4. Targeted advertising: broad, untargeted advertising performs poorly and is wasteful, so content should be tailored to public demand. Trending news and popular TV shows and films can serve as references for keeping pace with market trends.

Product structure: an outdoor LED display consists mainly of the screen body, the control system, the heat dissipation system, and the supporting structure. Control modes include remote control, on-site control, and timed switching.

SMD Outdoor Fixed LED Screen, Waterproof LED Wall Screen, Outdoor Fixed Install LED Screen, SMD Outdoor Fixed Advertising LED Display, Outdoor High Definition SMD LED Display

Shenzhen Vision Display Technology Co., Ltd., https://www.ledvdi.com