(Original title: The era of AI chip giants competing for dominance: after Google, NVIDIA, and Intel, Huawei's AI chip will debut next week)

Zhang Shaohua

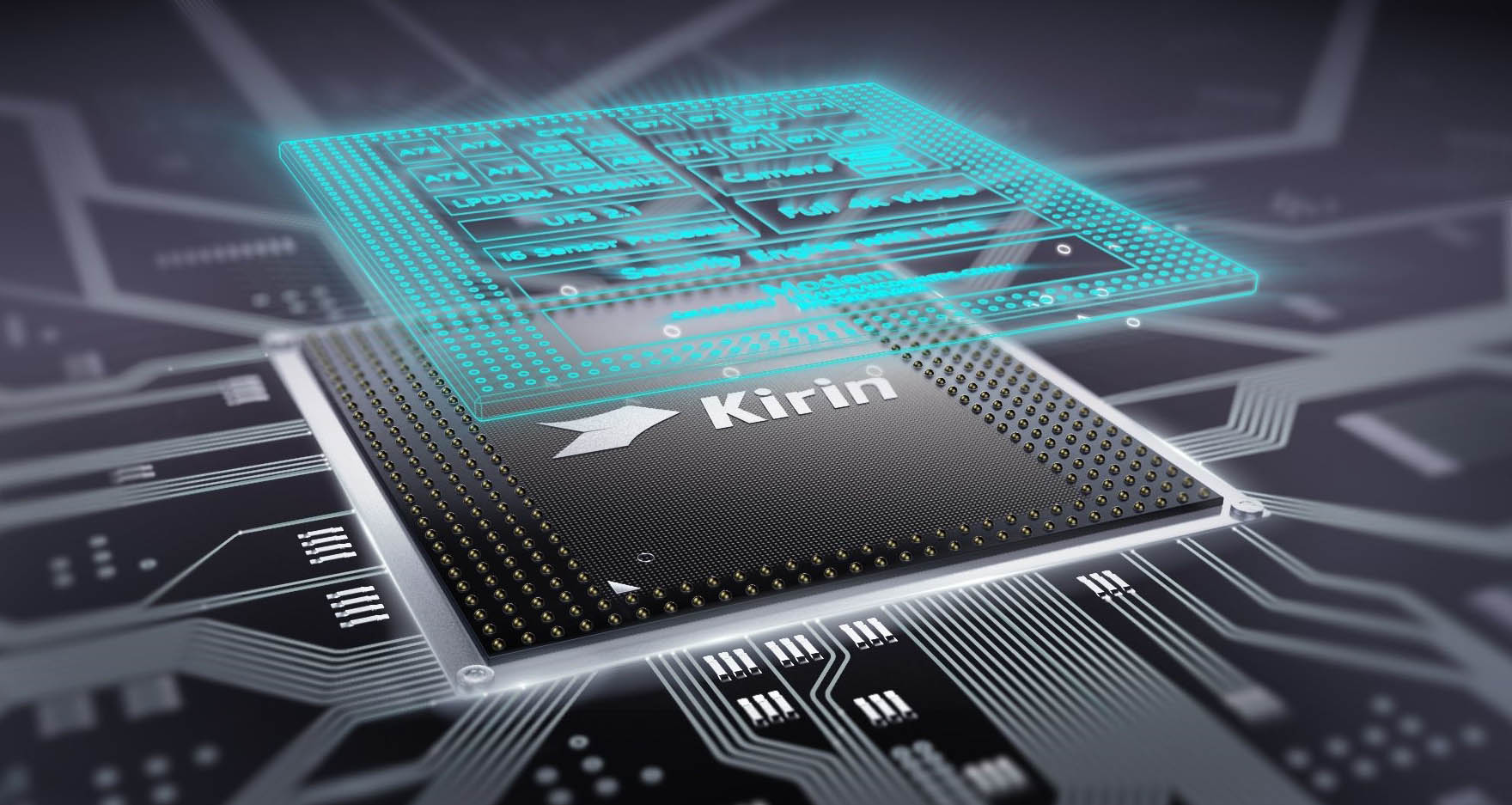

Today, Yu Chengdong, Huawei's senior vice president, released a video on Weibo to build momentum for the company's own artificial intelligence (AI) chip. He said that "the pursuit of speed never ends with imagination" and announced that the AI chip will debut on September 2 at IFA 2017.

At Huawei's mid-year results media briefing last month, Yu Chengdong revealed that the company will release an AI chip this fall, and that Huawei will be the first company to introduce an artificial intelligence processor in smartphones. In addition, at the 2017 China Internet Conference, Yu Chengdong stated that the chips produced by Huawei HiSilicon will integrate CPU, GPU, and AI functions, and may be based on the new AI chip design introduced by ARM at Computex this year.

According to Yu Chengdong's video today, Huawei's AI processor is expected to significantly improve the data processing speed of the Kirin 970. If the AI chip is used in the Huawei Mate 10 phone due for release in October, the Mate 10's data processing capability looks very promising.

Like Huawei, global technology giants such as Intel, Lenovo, Nvidia, Google, and Microsoft are all actively embracing AI, and staking out a position in AI chips has become a top priority.

Intel

Regarding the importance of AI chips, Song Jiqiang, director of the Intel China Research Institute, said in an interview with the media outlet Xinzhiyuan this month that technology is needed to process large amounts of data and generate value for customers, and that in this process the chip is undoubtedly extremely important:

By 2020, it is conservatively estimated that 50 billion devices will be interconnected worldwide. Future data will come from all kinds of device terminals. It will no longer depend on the data people generate by making calls, using mobile phones, or sending e-mail. Unmanned vehicles, smart homes, cameras, and other devices are all generating data.

In the future, every driverless car will be a server. Each car will generate more than 4,000 gigabytes of data per day, and that data cannot all be transmitted over 5G. A lot of it must therefore be processed and analyzed locally and then uploaded selectively. Local processing will use many technologies that go beyond modern server technology.
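As a rough illustration of the scale Song describes, the short calculation below converts the quoted 4,000 gigabytes per day into the sustained uplink a single car would need if it streamed everything around the clock. It is only a back-of-the-envelope sketch: it assumes decimal gigabytes and a perfectly even data rate over 24 hours, neither of which comes from the interview.

```python
# Back-of-the-envelope: sustained uplink needed to stream one car's daily data.
GB_PER_DAY = 4_000               # figure quoted in the article
BYTES_PER_GB = 10**9             # assumption: decimal gigabytes
SECONDS_PER_DAY = 24 * 60 * 60   # assumption: data spread evenly over the day


def sustained_mbps(gb_per_day: float) -> float:
    """Return the constant rate, in megabits per second, for a daily volume."""
    bits_per_day = gb_per_day * BYTES_PER_GB * 8
    return bits_per_day / SECONDS_PER_DAY / 1e6


rate = sustained_mbps(GB_PER_DAY)
print(f"{rate:.0f} Mbit/s sustained per car")  # 370 Mbit/s sustained per car
```

Roughly 370 Mbit/s, continuously, for every car on the road is the kind of load that makes local processing and selective upload attractive.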

As a traditional leader of the chip industry, Intel launched a new generation of Xeon server chips in July this year. Performance has increased dramatically, and deep learning capability is 2.2 times that of the previous generation of server chips. The chips can handle both training and inference tasks. In addition, Intel demonstrated field-programmable gate array (FPGA) technology, which it expects to play a major role in the future of AI. It also plans to launch the Lake Crest processor, which is aimed at deep learning code.

Lenovo

According to Yang Yuanqing, president of Lenovo Group, “the general-purpose AI processor chip is the strategic commanding height in the era of artificial intelligence.”

He Zhiqiang, senior vice president of Lenovo Group and president of Lenovo Venture Capital Group, said that in the era of the smart Internet, AI chips are the engine of artificial intelligence and will play a decisive role in the development of the intelligent Internet.

Just last week, Lenovo Capital Partners and top investors such as Alibaba Ventures jointly invested in Cambricon Technologies, known as the "first unicorn in the global AI chip field."

Nvidia

In the past few years, Nvidia has shifted its business focus to AI and deep learning. In May of this year, Nvidia released a heavyweight processor for artificial intelligence applications: the Tesla V100.

The chip has 21 billion transistors and is much more powerful than the Pascal processor with 15 billion transistors that Nvidia released a year ago. Although it is only about as large as the face of an Apple Watch, it has 5,120 CUDA (Compute Unified Device Architecture) cores and double-precision floating-point performance of 7.5 trillion operations per second (7.5 teraflops). Nvidia CEO Jensen Huang (Huang Renxun) said that Nvidia spent 3 billion US dollars to build this chip, and that the DGX-1 system built around it will be priced at 149,000 US dollars.

Google

Google, which announced its strategic shift to "AI first," released last year the TPU (Tensor Processing Unit), a chip tailored specifically for machine learning. Compared with contemporary CPUs and GPUs, the TPU is 15 to 30 times faster and 30 to 80 times more energy efficient.

At the Google I/O developer conference in May this year, Google announced a new product, the Cloud TPU, which has four processing chips and can deliver 180 teraflops of computing power. Connecting 64 Cloud TPUs to each other forms a supercomputer that Google calls a Pod. A Pod will have a computing power of 11.5 petaflops (1 petaflops is 10^15 floating-point operations per second), which will make it a very important basic tool for research in the AI field.
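The Pod figure follows directly from the per-device number, and the arithmetic can be checked in a few lines. The sketch below uses only the figures quoted above; the per-chip value is derived by assuming the 180 teraflops are split evenly across the four chips, which the announcement as reported here does not state explicitly.

```python
# Check the Cloud TPU Pod arithmetic using the figures quoted in the article.
TFLOPS_PER_CLOUD_TPU = 180   # one Cloud TPU (four chips)
CHIPS_PER_CLOUD_TPU = 4
CLOUD_TPUS_PER_POD = 64
TFLOPS_PER_PFLOPS = 1_000    # 1 petaflops = 1,000 teraflops = 10**15 FLOPS

# Assumption: the 180 teraflops are divided evenly among the four chips.
tflops_per_chip = TFLOPS_PER_CLOUD_TPU / CHIPS_PER_CLOUD_TPU

pod_tflops = TFLOPS_PER_CLOUD_TPU * CLOUD_TPUS_PER_POD
pod_pflops = pod_tflops / TFLOPS_PER_PFLOPS

print(f"{tflops_per_chip:.0f} teraflops per chip")   # 45 teraflops per chip
print(f"{pod_pflops:.2f} petaflops per Pod")         # 11.52 petaflops per Pod
```

The result, 11.52 petaflops, matches the roughly 11.5 petaflops Google quoted for a Pod.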

Currently, TPUs are deployed in almost all of Google's products, including Google Search and Google Assistant. TPUs also played a key role in the Go match between AlphaGo and Lee Sedol.

Microsoft

Last month, media reports said that Microsoft will add an independently designed AI coprocessor to the next-generation HoloLens. The coprocessor can analyze what the user sees and hears on the device itself, without wasting time transferring data to the cloud for processing. This AI chip is currently under development and will be included in the next-generation HoloLens Holographic Processing Unit (HPU). Microsoft said that the AI coprocessor will be its first chip designed for mobile devices.

In recent years, Microsoft has been working hard to develop its own AI chips. It developed a motion-tracking processor for the Xbox Kinect game system, and to compete with Google and Amazon in cloud services it customized a field-programmable gate array (FPGA). In addition, Microsoft purchased programmable chips from Altera, a subsidiary of Intel, and writes custom software for them to meet its needs.

Last year, Microsoft used thousands of AI chips at a conference to translate all of English Wikipedia, about 5 million articles, into Spanish in less than 0.1 second. Next, Microsoft hopes to let customers of its cloud perform tasks with AI chips, such as identifying images in massive data sets or predicting consumers' purchasing patterns with machine learning algorithms.
