Abstract: Based on research into vehicle license plate recognition systems, this paper adopts a rough license plate classification algorithm based on gray-domain binarization, a license plate character segmentation algorithm, and a character recognition algorithm based on weighted recognition features. Through experiments, an effective license plate recognition system was designed.

Key words: rough classification algorithm; character segmentation; character recognition

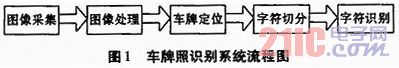

0 Introduction

A license plate recognition system is mainly divided into five stages: image acquisition, image processing, license plate location, character segmentation, and character recognition.


Image acquisition: At present, images are mainly acquired in real time by a dedicated camera connected either to an image acquisition card or directly to a laptop computer, which converts the analog signal into a digital signal.

Image processing: The captured image must be enhanced, restored, transformed, and so on, to highlight the main features of the license plate so that the plate area can be extracted more reliably.

License plate location: Following the way human vision works, features are extracted from the binarized image according to the characteristics of the character region of the license plate. Locating the license plate is the process of finding the area that best matches a license plate; in essence, it is the problem of finding the optimal location parameters in a parameter space, which must be solved with an optimization method.

Character segmentation: The process of separating individual characters (Chinese characters, letters, and digits) from the extracted license plate area so that they can be recognized. Apart from one Chinese character, the characters on a license plate are letters and digits; ideally, each character forms a single connected region that is disconnected from its neighbors, so a dedicated segmentation method can be used.

Character recognition: The process of converting the segmented characters into text, which is then stored in a database or displayed directly.

1 Image Preprocessing

The acquired color image is preprocessed by color-to-gray conversion followed by grayscale stretching, producing a 256-level grayscale image in which the license plate area is highlighted.

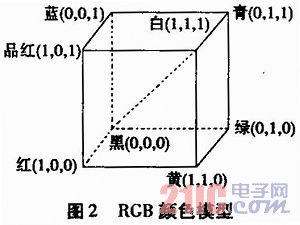

1.1 RGB Color Model

The RGB color model is commonly used in color raster display devices such as color cathode ray tubes, and it is the most widely used and most familiar color model. It uses a three-dimensional Cartesian coordinate system with red, green, and blue as the three primaries, which are mixed to produce composite colors.

The RGB color model is usually represented by the unit cube shown in Fig. 2. Along the main diagonal of the cube the three primaries have equal intensity, producing the gray levels from black to white: (0,0,0) is black and (1,1,1) is white. The other six vertices of the cube are red, yellow, green, cyan, blue, and magenta. Note that the color gamut covered by the RGB model depends on the color characteristics of the phosphor dots of the display device, so it is hardware-dependent.

1.2 Converting a Color Image into a Grayscale Image

The color image is converted into a grayscale image for further processing using the formula $P = 0.299R + 0.587G + 0.114B$, where $P$ is the gray value of a pixel and $R$, $G$, and $B$ are the values of the R, G, and B components of the corresponding pixel in the color image. Fig. 3 shows the result of converting a color image into a grayscale image.
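A minimal sketch of this conversion in Python, assuming the color image is given as rows of (R, G, B) tuples with components in 0-255. It uses the standard ITU-R BT.601 luma weights, in which 0.299 multiplies R and 0.114 multiplies B; the function name `rgb_to_gray` is illustrative only.

```python
def rgb_to_gray(pixels):
    """Convert rows of (R, G, B) tuples (0-255) into rows of 256-level gray values."""
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in pixels
    ]


if __name__ == "__main__":
    image = [[(255, 255, 255), (255, 0, 0)], [(0, 255, 0), (0, 0, 255)]]
    print(rgb_to_gray(image))  # [[255, 76], [150, 29]]
```

Green carries the largest weight because the eye is most sensitive to it, so a pure green pixel maps to a much lighter gray than a pure blue one.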

1.3 Grayscale Image Enhancement

Grayscale stretching linearly transforms the gray values of the pixels within a chosen gray-level interval, with the goal of enhancing the contrast of that interval. The general gray-level transformation is $D = AX + B$, where $D$ is the gray matrix after the transformation, $X$ is the gray matrix before the transformation, and $A$ and $B$ are the coefficients of the transformation.

Grayscale stretching selectively stretches a gray-level interval according to the distribution of the gray-level histogram in order to improve the output image. If the gray levels of an image are concentrated in the dark range, so that the image looks dark, a stretching function with slope $A > 1$ can be used to stretch the object's gray-level interval and improve the image; likewise, if the gray levels are concentrated in the bright range, so that the image looks too bright, a function with slope $A < 1$ can be used to compress the object's gray-level interval and improve the image quality.
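As a sketch, assuming a grayscale image stored as a list of rows of 0-255 integers, the transformation $D = AX + B$ can be applied per pixel with clipping to the valid range. The helper `stretch_interval` is a hypothetical convenience that chooses $A$ and $B$ so that a selected interval [low, high] is mapped onto the full 0-255 range, which gives $A > 1$ whenever the interval is narrower than 256 levels; calling `linear_gray_transform` directly with $A < 1$ compresses the gray levels instead.

```python
def linear_gray_transform(gray, a, b):
    """Apply D = A*X + B to every pixel, rounding and clipping the result to 0..255."""
    return [[min(255, max(0, round(a * x + b))) for x in row] for row in gray]


def stretch_interval(gray, low, high):
    """Map the gray interval [low, high] linearly onto [0, 255] (hypothetical helper)."""
    a = 255.0 / (high - low)  # slope A > 1 when the interval is narrower than 256 levels
    b = -a * low              # offset B so that `low` maps to 0
    return linear_gray_transform(gray, a, b)


if __name__ == "__main__":
    dark = [[0, 10, 127, 200]]
    print(stretch_interval(dark, 0, 127))            # dark interval stretched, A > 1
    print(linear_gray_transform([[200, 100]], 0.5, 0))  # gray levels compressed, A < 1
```

Values above `high` saturate at 255 and values below `low` at 0, which is the usual trade-off of stretching one interval at the expense of the others.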

The binarization threshold is selected by the following algorithm:

Input: grayscale image

Output: threshold k

algorithm:

(1) Find the maximum gray level max in the image;

(2) Let k=0;

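(3) Divide the pixels into two classes, those with gray value at most k and those with gray value greater than k, and compute the number of pixels $N_0$, $N_1$ and the mean gray value $\mu_0$, $\mu_1$ of each class;

(4) With class proportions $w_0 = N_0/N$ and $w_1 = N_1/N$ ($N$ is the total number of pixels) and class variances $\sigma_0^2$, $\sigma_1^2$, compute the between-class variance $\sigma_B^2(k) = w_0 w_1 (\mu_0 - \mu_1)^2$ and the within-class variance $\sigma_W^2(k) = w_0 \sigma_0^2 + w_1 \sigma_1^2$;

(5) Set k = k + 1 and repeat steps (3) to (5) until k > max;

(6) Find the largest value of $\sigma_B^2(k)/\sigma_W^2(k)$; the corresponding k is the threshold. Because $\sigma_B^2(k) + \sigma_W^2(k)$ equals the total variance of the image, which does not depend on k, this is the same k that maximizes $\sigma_B^2(k)$ alone.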
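This procedure is essentially Otsu's threshold selection method. A minimal Python sketch, assuming an integer grayscale image stored as a list of rows, scans every k from 0 to the maximum gray level and keeps the k with the largest between-class variance; because the total variance $\sigma_B^2 + \sigma_W^2$ does not depend on k, this is equivalent to maximizing $\sigma_B^2/\sigma_W^2$. Mapping pixels above the threshold to 1 and the rest to 0 in `binarize` is an assumed convention.

```python
def otsu_threshold(gray):
    """Return the k that maximizes the between-class variance (class 0: <= k, class 1: > k)."""
    pixels = [p for row in gray for p in row]
    max_level = max(pixels)                      # step (1)
    hist = [0] * (max_level + 1)
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    best_k, best_var = 0, -1.0
    for k in range(max_level + 1):               # steps (2) and (5): k = 0 .. max
        n0 = sum(hist[: k + 1])                  # step (3): class sizes and means
        n1 = total - n0
        if n0 == 0 or n1 == 0:
            continue                             # one class is empty, no valid split
        mu0 = sum(i * hist[i] for i in range(k + 1)) / n0
        mu1 = sum(i * hist[i] for i in range(k + 1, max_level + 1)) / n1
        w0, w1 = n0 / total, n1 / total
        var_between = w0 * w1 * (mu0 - mu1) ** 2  # step (4)
        if var_between > best_var:               # step (6): keep the maximum
            best_k, best_var = k, var_between
    return best_k


def binarize(gray, k):
    """Pixels brighter than the threshold become 1, all others 0."""
    return [[1 if p > k else 0 for p in row] for row in gray]


if __name__ == "__main__":
    image = [[10, 10, 10, 200, 200, 200]]
    k = otsu_threshold(image)
    print(k, binarize(image, k))  # 10 [[0, 0, 0, 1, 1, 1]]
```

The double loop over gray levels is quadratic in the number of levels, which is harmless for 256 levels; cumulative sums would make it linear.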

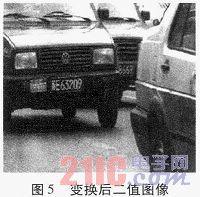

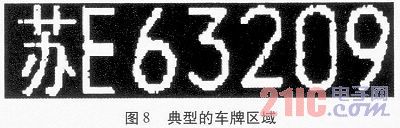

With this algorithm, the threshold is determined dynamically from the gray levels of the whole image, so each image is binarized with its own threshold. The binarized image is shown in Fig. 5: the license plate area is clearly highlighted, and other interfering information is largely suppressed.

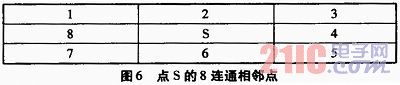

2 Rough Positioning of the License Plate Area

An image consists of several connected domains, each formed by adjacent points. For a point S, its 8-connected neighbors are points 1, 2, 3, 4, 5, 6, 7, and 8 (see Fig. 6), and its 4-connected neighbors are points 2, 4, 6, and 8. Compared with 4-connectivity, 8-connectivity better reflects adjacency, so this paper computes the 8-connected domains of the image.

This paper computes the connected domains of the whole image by a depth-first search over the neighbors of each point. The algorithm is as follows:

Input: binary image matrix

Output: connected-domain matrix, in which each black pixel is marked with the label of its connected domain

algorithm:

(1) Read in the binary image matrix and set the connected-domain number k = 0;

(2) Scan for a pixel that has not yet been labeled and mark it with k;

(3) Examine the eight neighbors of the current point in turn; each unlabeled neighbor whose value equals that of the current point is pushed onto the stack and marked with k;

(4) Pop the pixel at the top of the stack and return to step (3) with it as the current point, until the stack is empty;

(5) Set k = k + 1;

(6) Return to step (2) until all pixels have been scanned.
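A minimal Python sketch of this stack-based (iterative depth-first) labeling, assuming a binary image given as a list of rows in which 1 marks the pixels of interest. Unlike the steps above, which label every pixel, this version labels only foreground pixels and leaves the background at 0, in line with the output description in which black pixels receive labels; treating 1 as foreground is an assumption, and labels start at 1 so that 0 can mean background.

```python
# The eight neighbours of a point S (row offset, column offset).
NEIGHBOURS_8 = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]


def label_components(binary, foreground=1):
    """Label 8-connected foreground regions; returns (label matrix, number of regions)."""
    h, w = len(binary), len(binary[0])
    labels = [[0] * w for _ in range(h)]
    k = 0
    for y in range(h):
        for x in range(w):
            if binary[y][x] != foreground or labels[y][x]:
                continue                      # background or already labeled
            k += 1                            # start a new connected domain
            labels[y][x] = k
            stack = [(y, x)]
            while stack:                      # depth-first search with an explicit stack
                cy, cx = stack.pop()
                for dy, dx in NEIGHBOURS_8:
                    ny, nx = cy + dy, cx + dx
                    if (0 <= ny < h and 0 <= nx < w
                            and binary[ny][nx] == foreground and not labels[ny][nx]):
                        labels[ny][nx] = k    # mark before pushing so no pixel is pushed twice
                        stack.append((ny, nx))
    return labels, k


if __name__ == "__main__":
    diagonal = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    print(label_components(diagonal)[1])  # 1
```

A diagonal line forms a single domain under 8-connectivity, whereas 4-connectivity would split it into three; an explicit stack also avoids the recursion-depth limit that a recursive search would hit on large regions.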

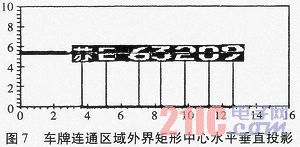

A license plate consists of several characters of similar size. The connected domains in the plate area are formed by whole characters or parts of characters, by the plate frame, and by the plate background. Normally, each character forms a connected domain whose circumscribed rectangle is roughly the same size as those of the other characters, as shown in Fig.
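To work with these circumscribed rectangles, the bounding box of each labeled connected domain can be collected in one pass over a label matrix (0 = background), as in the following sketch. The filter `similar_height_boxes`, which keeps domains whose height is within a fraction of the median height, is a hypothetical illustration of the "similar size" observation; the source does not specify a criterion or tolerance.

```python
def bounding_boxes(labels):
    """Return {label: (top, left, bottom, right)} with inclusive coordinates."""
    boxes = {}
    for y, row in enumerate(labels):
        for x, lab in enumerate(row):
            if lab == 0:
                continue
            if lab not in boxes:
                boxes[lab] = [y, x, y, x]
            else:
                b = boxes[lab]
                b[0], b[1] = min(b[0], y), min(b[1], x)
                b[2], b[3] = max(b[2], y), max(b[3], x)
    return {lab: tuple(b) for lab, b in boxes.items()}


def similar_height_boxes(boxes, tolerance=0.3):
    """Keep boxes whose height is within +/- tolerance of the median height (hypothetical rule)."""
    if not boxes:
        return {}
    heights = sorted(b[2] - b[0] + 1 for b in boxes.values())
    median = heights[len(heights) // 2]
    return {lab: b for lab, b in boxes.items()
            if abs((b[2] - b[0] + 1) - median) <= tolerance * median}


if __name__ == "__main__":
    labels = [
        [1, 0, 2, 0, 3, 0, 0],
        [1, 0, 2, 0, 3, 0, 0],
        [1, 0, 2, 0, 3, 0, 4],   # label 4 is a one-pixel noise blob
    ]
    print(sorted(similar_height_boxes(bounding_boxes(labels))))  # [1, 2, 3]
```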

2.1 Feature Extraction of the Vehicle License Plate Area

After several candidate license plate areas have been obtained, features must be extracted from them so that they can be classified and the license plate identified. Because the candidate areas differ in size, they are first normalized to images of the same size, and then their horizontal crossing features and vertical projection features are extracted. Because the feature dimension is relatively high, which increases the computational burden and storage requirements, the K-L transform is used to compress the feature dimension.
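The K-L (Karhunen-Loève) transform projects mean-centred feature vectors onto the eigenvectors of their covariance matrix with the largest eigenvalues, which is the same computation as principal component analysis. The sketch below is a dependency-free Python illustration, assuming the features are lists of floats; it finds the leading eigenvectors by power iteration with deflation, a choice made here for self-containment rather than taken from the source, and the number of retained dimensions `k` is a free parameter.

```python
import math
import random


def mean_vector(samples):
    """Component-wise mean of a list of equal-length feature vectors."""
    n = len(samples)
    return [sum(col) / n for col in zip(*samples)]


def covariance(samples, mu):
    """Covariance matrix of the samples, normalized by the number of samples."""
    d, n = len(mu), len(samples)
    cov = [[0.0] * d for _ in range(d)]
    for s in samples:
        c = [s[j] - mu[j] for j in range(d)]
        for i in range(d):
            for j in range(d):
                cov[i][j] += c[i] * c[j] / n
    return cov


def top_eigenvectors(matrix, k, iters=200):
    """Leading k unit eigenvectors of a symmetric PSD matrix (power iteration + deflation)."""
    d = len(matrix)
    m = [row[:] for row in matrix]
    rng = random.Random(0)
    vectors = []
    for _ in range(k):
        v = [rng.random() + 0.1 for _ in range(d)]
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
        for _ in range(iters):
            w = [sum(m[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:
                break  # no variance left in the deflated matrix
            v = [x / norm for x in w]
        lam = sum(v[i] * sum(m[i][j] * v[j] for j in range(d)) for i in range(d))
        vectors.append(v)
        for i in range(d):  # deflate: remove the component just found
            for j in range(d):
                m[i][j] -= lam * v[i] * v[j]
    return vectors


def kl_transform(samples, k):
    """Compress each feature vector to k dimensions along the main covariance directions."""
    mu = mean_vector(samples)
    basis = top_eigenvectors(covariance(samples, mu), k)
    return [[sum((s[j] - mu[j]) * b[j] for j in range(len(mu))) for b in basis]
            for s in samples]


if __name__ == "__main__":
    features = [[0, 0], [1, 2], [2, 4], [3, 6]]  # all variance lies along (1, 2)
    print(kl_transform(features, 1))
```

In practice the basis would be computed once from training samples and then reused to project new candidate areas; a numerical library's symmetric eigensolver would replace the power iteration for larger feature vectors.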

To further confirm the license plate area and determine its precise position, features are extracted from the license plate candidate areas. Common license plate features include texture features, horizontal crossing features, and vertical projection features. This paper uses the horizontal crossing and vertical projection features of the candidate areas to classify them.
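The two features named here can be sketched as follows, assuming a binary candidate area stored as a list of rows with 1 for foreground. The area is first normalized by nearest-neighbour resampling to a fixed size (the 16 x 48 default is a hypothetical choice), the horizontal crossing feature counts the 0/1 transitions along each row, and the vertical projection feature counts the foreground pixels in each column; the two are concatenated into one feature vector.

```python
def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resampling of a binary image to out_h x out_w."""
    h, w = len(img), len(img[0])
    return [[img[r * h // out_h][c * w // out_w] for c in range(out_w)]
            for r in range(out_h)]


def horizontal_crossings(img):
    """Number of 0/1 transitions in each row (plates give many crossings per row)."""
    return [sum(1 for a, b in zip(row, row[1:]) if a != b) for row in img]


def vertical_projection(img):
    """Number of foreground pixels in each column."""
    return [sum(col) for col in zip(*img)]


def plate_features(region, out_h=16, out_w=48):
    """Normalize the candidate area, then concatenate both features (out_h + out_w values)."""
    norm = resize_nearest(region, out_h, out_w)
    return horizontal_crossings(norm) + vertical_projection(norm)


if __name__ == "__main__":
    region = [[0, 1, 0, 1], [1, 1, 0, 0]]
    print(plate_features(region, out_h=2, out_w=4))  # [3, 1, 1, 2, 0, 1]
```

Rows that pass through a line of characters alternate frequently between stroke and background, which is why the crossing count helps separate plate areas from smooth regions of similar size.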

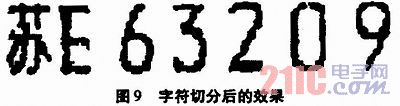

3 Character Segmentation Algorithm for Vehicle License Plates

The algorithm first binarizes the image and computes its horizontal and vertical pixel projections. The projection method gives the top and bottom coordinates of the license plate characters and the positions of the leftmost and rightmost characters, and hence the height and width of the whole character area, from which the height and width of a single character are estimated. The connected domains of the license plate area are then computed, the connected domains between the leftmost and rightmost character positions are extracted, and finally the connected domains that are too wide for the estimated character size are split, giving the final segmentation result. The algorithm is as follows:

Input: binarized image matrix;

Output: the rectangle coordinates of each segmented character.

algorithm:

(1) Count the number of pixels with value 0 in each row, i.e., compute the horizontal projection;

(2) Find the first local minimum of the horizontal projection from top to bottom and take it as the upper boundary;

(3) Find the first local minimum of the horizontal projection from bottom to top and take it as the lower boundary;

(4) Count the number of pixels with value 0 in each column between the upper and lower boundaries, i.e., compute the vertical projection;

(5) Find the first local minimum point from left to right as the left boundary of the segmentation image;

(6) Find the first local minimum point from right to left as the right boundary of the segmentation image;

Width of the segmentation area: width = right boundary abscissa - left boundary abscissa;

Height of the segmentation area: height = lower boundary ordinate - upper boundary ordinate (image rows are numbered from top to bottom);

Width of a single character: w = width / 8;

(7) Calculate the 8-connected domain between the upper and lower boundaries and the left and right boundaries;

(8) Check whether the width of each connected domain is greater than 1.2*w;

If no, go to (10);

If yes, go to (9);

(9) For each connected domain wider than 1.2*w, find the minimum point of its vertical projection and split the domain into two parts at that point; go to (8);

(10) Output the coordinates of all segmentation areas;

(11) The algorithm ends.
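A compact Python sketch of steps (1) to (11), assuming a binary plate image stored as a list of rows with 1 marking character pixels, so the projections count 1s rather than 0s. "Local minimum" is read here as a point no larger than its neighbours (the source does not define it precisely), the connected domains are found with an 8-connected stack-based search, a split box keeps the rows of its parent and drops the minimum column itself, and all helper names are illustrative.

```python
def first_local_min(proj):
    """First index whose value is <= its neighbours (end points need only one neighbour)."""
    n = len(proj)
    for i in range(n):
        if (i == 0 or proj[i] <= proj[i - 1]) and (i == n - 1 or proj[i] <= proj[i + 1]):
            return i
    return 0


def components(binary, top, bottom, left, right):
    """Boxes (top, left, bottom, right) of 8-connected foreground domains inside the area."""
    seen, boxes = set(), []
    for y in range(top, bottom + 1):
        for x in range(left, right + 1):
            if binary[y][x] != 1 or (y, x) in seen:
                continue
            seen.add((y, x))
            stack, box = [(y, x)], [y, x, y, x]
            while stack:
                cy, cx = stack.pop()
                box = [min(box[0], cy), min(box[1], cx), max(box[2], cy), max(box[3], cx)]
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = cy + dy, cx + dx
                        if (top <= ny <= bottom and left <= nx <= right
                                and binary[ny][nx] == 1 and (ny, nx) not in seen):
                            seen.add((ny, nx))
                            stack.append((ny, nx))
            boxes.append(tuple(box))
    return boxes


def segment_characters(binary, n_slots=8, wide_factor=1.2):
    """Projection + connected-domain segmentation; returns boxes sorted left to right."""
    h, w_img = len(binary), len(binary[0])
    rows = [sum(row) for row in binary]                           # (1) horizontal projection
    top = first_local_min(rows)                                    # (2) upper boundary
    bottom = h - 1 - first_local_min(rows[::-1])                   # (3) lower boundary
    cols = [sum(binary[y][x] for y in range(top, bottom + 1)) for x in range(w_img)]  # (4)
    left = first_local_min(cols)                                   # (5) left boundary
    right = w_img - 1 - first_local_min(cols[::-1])                # (6) right boundary
    char_w = (right - left) / n_slots                              # single character width w
    pending = components(binary, top, bottom, left, right)        # (7) connected domains
    result = []
    while pending:                                                 # (8)-(9)
        t, l, b, r = pending.pop()
        if r - l + 1 > wide_factor * char_w and r - l >= 2:
            proj = [sum(binary[y][x] for y in range(t, b + 1)) for x in range(l + 1, r)]
            m = l + 1 + proj.index(min(proj))                      # interior column with fewest pixels
            pending += [(t, l, b, m - 1), (t, m + 1, b, r)]
        else:
            result.append((t, l, b, r))
    return sorted(result, key=lambda box: box[1])                  # (10) output coordinates
```

Using a work list instead of rescanning all domains keeps the "go to (8)" loop simple: each split produces strictly narrower boxes, so the loop always terminates.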

Figure 9 shows the result of the license plate segmentation.

4 Vehicle License Plate Character Recognition

Character recognition is the last component of the license plate recognition system. It processes the results of image acquisition, image processing, license plate location, and character segmentation to obtain the characters of the vehicle license plate. License plate character recognition has much in common with ordinary OCR character recognition; it usually applies OCR methods directly or adapts them, and can achieve good recognition results.

Vehicle license plate character recognition is a branch of pattern recognition and adopts its classic theories and methods. The usual pattern recognition process can be summarized as a mapping from the measurement space to the feature space and then to the pattern space. In general character recognition, features describing the character are extracted from the input pattern of the character to be recognized (the sample), and the class to which the sample belongs is then determined according to some criterion. Character description, feature extraction and selection, and classification are therefore the three basic steps of character recognition.
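To make the three steps concrete, the sketch below uses a simple instance of each, all of which are assumptions rather than the method of this paper: the character is described as a binary grid (1 = stroke), the features are the stroke-pixel densities of a 2 x 2 zoning grid, and the classification criterion is the nearest template under a weighted squared Euclidean distance. The optional `weights` list only illustrates how per-feature weights could enter the distance; the source does not give weight values.

```python
def zone_features(char, zones=(2, 2)):
    """Feature extraction: fraction of stroke pixels in each cell of a zoning grid."""
    h, w = len(char), len(char[0])
    zr, zc = zones
    feats = []
    for i in range(zr):
        for j in range(zc):
            r0, r1 = i * h // zr, (i + 1) * h // zr
            c0, c1 = j * w // zc, (j + 1) * w // zc
            cells = [char[y][x] for y in range(r0, r1) for x in range(c0, c1)]
            feats.append(sum(cells) / len(cells) if cells else 0.0)
    return feats


def classify(char, templates, weights=None):
    """Classification: label of the template feature vector nearest to the sample."""
    f = zone_features(char)
    weights = weights or [1.0] * len(f)

    def dist(g):
        return sum(wt * (a - b) ** 2 for wt, a, b in zip(weights, f, g))

    return min(templates, key=lambda label: dist(templates[label]))


if __name__ == "__main__":
    left_bar = [[1, 1, 0, 0] for _ in range(4)]
    top_bar = [[1, 1, 1, 1], [1, 1, 1, 1], [0, 0, 0, 0], [0, 0, 0, 0]]
    templates = {"L": zone_features(left_bar), "T": zone_features(top_bar)}
    noisy = [[1, 1, 0, 0], [1, 0, 0, 0], [1, 1, 0, 0], [1, 1, 0, 1]]
    print(classify(noisy, templates))  # L
```

Real systems use finer grids and several templates per character, but the structure (describe, extract features, decide by a criterion) stays the same.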

5 Summary

The vehicle license plate recognition system is an important technology in intelligent traffic management systems and plays a key role in the automated management of vehicles. Because of its adaptability and high degree of automation, license plate recognition for moving vehicles has become an increasingly important and widely used part of intelligent traffic management systems.
