1 Introduction

Mobile robots are an important branch of robotics. With the rapid development of related technologies, they are becoming more intelligent and more diverse, and they are now used in almost every field. Yu Chun et al. used lidar to detect road boundaries. The method works well, but its detection degrades when interference signals are strong. Fu Mengyin et al. proposed a navigation method that uses the baseboard as the reference target, which improves the real-time performance of visual navigation. This paper adopts visual navigation. On a structured road, the robot tracks the road, stops at target points and guides visitors, with good results.

2 Introduction to the navigation robot

Guide robots are used in large exhibition halls, museums and other convention centers. They lead visitors along a fixed route, explain the exhibits and hold simple conversations. The navigation robot therefore needs several functions: autonomous navigation, path planning, intelligent obstacle avoidance, docking and positioning at target points, spoken explanation, and simple dialogue with visitors. It must also respond and adapt quickly to its environment.

The navigation robot follows a hierarchical structure with three layers: an artificial intelligence layer, a control coordination layer and a motion execution layer. The artificial intelligence layer mainly uses the CCD camera to plan the path for autonomous navigation. The control coordination layer fuses the information from multiple sensors. The motion execution layer makes the robot move. Figure 1 is a block diagram of the overall structure of the intelligent navigation robot.
3 Navigation robot hardware design

3.1 Hardware implementation of the artificial intelligence layer

The mobile robot control system needs fast processing, easy expansion with peripherals, small size and light weight. A PC104 system was therefore chosen as the host computer, and its software is written in C. A USB camera captures visual information in front of the robot, which forms the basis for visual navigation and path planning. A microphone and a speaker are attached so that the robot can give a spoken explanation when it reaches a target point.

3.1.1 Hardware implementation of the control coordination layer

The choice of sensors should depend on the robot's task and its application characteristics. Here, ultrasonic sensors, infrared sensors, electronic compasses and gyroscopes collect information about the robot's surroundings and support obstacle avoidance and path planning. An ARM processor platform drives the motors over an RS-485 bus to make the robot move.

The navigation robot requires relatively high accuracy, good repeatability, strong immunity to interference, and high stability and reliability. It must also be able to determine its own position accurately while moving. The digital compass reliably outputs the heading angle. The gyroscope measures the drift, so that the necessary corrections keep the robot from deviating from its intended direction. Ultrasonic and infrared sensors are combined to obtain information about obstacles ahead, and the system has 6 ultrasonic sensors and 6 infrared sensors.
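
The compass/gyroscope heading correction and the ultrasonic/infrared obstacle check described above can be sketched as follows. This is a minimal illustration in Python, not the system's C code. The paper does not say how the two heading sources are combined, so a complementary filter is assumed here. The blending factor `alpha`, the speed of sound of 343 m/s (air at about 20 °C), the 0.5 m stop distance and all function names are hypothetical.

```python
SPEED_OF_SOUND = 343.0  # m/s in air at about 20 °C (assumed operating condition)


def wrap180(angle_deg):
    """Map an angle in degrees to the interval [-180, 180)."""
    return (angle_deg + 180.0) % 360.0 - 180.0


def fuse_heading(heading_deg, gyro_rate_dps, compass_deg, dt, alpha=0.98):
    """Complementary filter (assumed technique, not stated in the paper):
    integrate the gyroscope for short-term accuracy, then pull the estimate
    toward the compass heading along the shortest arc to cancel gyro drift."""
    predicted = heading_deg + gyro_rate_dps * dt
    correction = wrap180(compass_deg - predicted)
    return (predicted + (1.0 - alpha) * correction) % 360.0


def echo_to_distance(echo_time_s):
    """Ultrasonic time of flight: the pulse travels to the obstacle and back."""
    return SPEED_OF_SOUND * echo_time_s / 2.0


def obstacle_ahead(echo_time_s, ir_triggered, stop_distance_m=0.5):
    """Hypothetical fusion rule: the infrared sensor covers the ultrasonic
    blind zone at close range, and the ultrasonic range decides otherwise."""
    if ir_triggered:
        return True
    if echo_time_s is None:  # no echo received: nothing within range
        return False
    return echo_to_distance(echo_time_s) < stop_distance_m


if __name__ == "__main__":
    heading = 10.0
    for _ in range(1000):  # gyro reports no rotation, compass reads 350°
        heading = fuse_heading(heading, 0.0, 350.0, dt=0.01)
    print(round(heading, 3))
    print(obstacle_ahead(0.002, ir_triggered=False))  # 0.343 m -> True
```

A complementary filter is used here only because it is the simplest way to let the gyroscope track fast changes while the compass removes slow drift. A Kalman filter would be a common alternative.
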
Of the six ultrasonic sensors, one faces forward and one faces backward. The other four are mounted on the front and rear sides at an angle of 45°. Each infrared sensor is mounted 1 to 2 cm directly above an ultrasonic sensor. The ultrasonic sensors avoid obstacles mainly by measuring distance. The infrared sensors mainly cover the ultrasonic blind zone and detect whether an obstacle is present at close range.

3.1.2 Hardware implementation of the motion execution layer

The actuators of the intelligent navigation robot are DC servo motors. A Sanyo Electric Super_L motor (24 V / 3.7 A) lets the robot carry out the corresponding actions. It has a rated output power of 60 W, a maximum no-load speed of 3000 r/min and a 500-line optical encoder.

Wheel speed is under closed-loop control. The optical encoder measures the actual wheel speed and feeds it back to the microcontroller. Based on the difference between the actual and commanded speeds, the driver adjusts the motor voltage with a control law such as PID, and it repeats this until the commanded speed is reached.

Speed control is performed by FAULHABER's MCDC2805 controller, which synchronizes speed while minimizing torque ripple. Its built-in PI regulator reaches the specified position accurately. With the Super_L motor and its integrated encoder, a positioning accuracy of 0.18° is achieved even at very low speeds.

3.2 Navigation robot software design

A USB camera (or another camera) captures the visual information in front of the navigation robot. Image processing algorithms then process the video so that the robot can plan its path and navigate autonomously.
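
The closed-loop speed control of the motion execution layer can be sketched as a discrete PID loop fed by encoder counts. This is an illustration under stated assumptions, not the MCDC2805 firmware. It assumes 4x quadrature decoding of the 500-line encoder, which gives 2000 counts per revolution and matches 360°/2000 = 0.18° per count. The motor is replaced by a hypothetical first-order model, and its time constant and the gains were picked only so that the loop settles.

```python
ENCODER_LINES = 500                          # optical encoder lines per revolution (from the text)
QUADRATURE = 4                               # assumed 4x edge decoding
COUNTS_PER_REV = ENCODER_LINES * QUADRATURE  # 2000 counts per revolution


def degrees_per_count():
    """Angular resolution of one encoder count."""
    return 360.0 / COUNTS_PER_REV


def counts_to_rpm(delta_counts, dt):
    """Motor speed measured from the encoder counts seen in dt seconds."""
    return delta_counts / COUNTS_PER_REV / dt * 60.0


class PID:
    """Discrete PID controller: u = kp*e + ki*integral(e) + kd*de/dt."""

    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measured):
        error = setpoint - measured
        self.integral += error * self.dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(setpoint_rpm, steps=500, dt=0.01, tau=0.1):
    """Drive a hypothetical first-order motor model (steady-state gain 1,
    time constant tau) with a PI controller and return the final speed."""
    pid = PID(kp=1.0, ki=10.0, kd=0.0, dt=dt)
    speed = 0.0
    for _ in range(steps):
        command = pid.update(setpoint_rpm, speed)
        speed += dt * (command - speed) / tau
    return speed


if __name__ == "__main__":
    print(degrees_per_count())       # 0.18
    print(counts_to_rpm(500, 0.01))  # 1500.0
    print(round(simulate(1000.0), 3))
```

With `kd=0` the controller reduces to a PI regulator, the same form the MCDC2805 provides. The integral term is what removes the steady-state speed error.
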
Using the fused multi-sensor information received from the lower layer, the robot avoids obstacles at close range and issues an alarm when it encounters an obstacle. After reaching a target point it gives a spoken commentary, and after the explanation it can hold a simple conversation with visitors.

4 Visual navigation

Visual navigation is one of the navigation methods for mobile robots, and research on vision-based navigation is one of the main future directions of mobile robot navigation. Within the whole system, the vision subsystem captures visual information about the surroundings with the camera and interprets the images. It then controls the robot's motion according to the image processing results. "Image understanding" means processing image data to understand the scene the image shows, including which objects it contains and where they are located. An image contains a wealth of information, and only the part relevant to the task needs to be extracted. Image understanding algorithms are therefore usually designed for a specific purpose, and each has its own conditions of applicability and limitations.

4.1 Image preprocessing

The original image is an indoor structured-road image with blue labels, captured by a Logitech camera at 320x240 pixels. It is first converted to grayscale and then binarized with a suitable threshold. The useful information is then extracted, and the direction of travel is obtained with morphological dilation, erosion and similar operations, as shown in Figure 2. Figure 3 compares the results of common edge detection operators. As Figure 3 shows, the Canny and Sobel operators perform relatively well. The Sobel operator also smooths noise and provides more accurate edge direction information.
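
The preprocessing chain of Section 4.1 and the Sobel operator can be sketched in plain Python on small images stored as nested lists. The chain covers grayscale conversion, threshold binarization, and morphological erosion and dilation. This is a minimal sketch, not the paper's Matlab code. The ITU-R BT.601 luminance weights, the fixed threshold of 128, the 3x3 square structuring element, the |Gx| + |Gy| magnitude approximation and the function names are all assumptions. The paper chooses its threshold experimentally.

```python
def to_gray(rgb):
    """Weighted luminance (ITU-R BT.601 weights) for a 2-D list of (r, g, b)."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in rgb]


def binarize(gray, threshold=128):
    """1 where the pixel is brighter than the threshold, else 0."""
    return [[1 if v > threshold else 0 for v in row] for row in gray]


def _morph(img, keep):
    """Apply a 3x3 square structuring element; pixels outside the image are
    ignored. `keep` is `all` for erosion and `any` for dilation."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [img[j][i]
                      for j in range(max(0, y - 1), min(h, y + 2))
                      for i in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = 1 if keep(window) else 0
    return out


def erode(img):
    return _morph(img, all)


def dilate(img):
    return _morph(img, any)


def open_binary(img):
    """Opening (erosion, then dilation) removes specks smaller than the element."""
    return dilate(erode(img))


SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel(img):
    """Gradient magnitude |Gx| + |Gy| on interior pixels (borders left at 0)."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = sum(SOBEL_X[j][i] * img[y + j - 1][x + i - 1] for j in range(3) for i in range(3))
            gy = sum(SOBEL_Y[j][i] * img[y + j - 1][x + i - 1] for j in range(3) for i in range(3))
            out[y][x] = abs(gx) + abs(gy)
    return out
```

Opening (erosion followed by dilation) is shown because it removes isolated noise pixels while leaving wide road markings intact. Closing (dilation followed by erosion) would instead fill small gaps in the markings.
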
Here, the Sobel operator is used for edge detection, as shown in Figure 4. From the edges in Figure 4, the system uses the Hough transform to detect the positions of the two straight road edges. It then measures, in pixels, the distance from each side of the image to the corresponding edge line. A calibration against actual ground distances converts these measurements into the robot's position.

4.2 Template matching algorithm

Template matching is an important research direction in image target recognition. The algorithm is simple, needs little computation and has a high recognition rate, so it is widely used for target recognition. A small image, the template, is compared with the source image to determine whether the source image contains a region that is identical or similar to the template. If such a region exists, its position can be determined and the region extracted. The sum of squared errors between the template and the corresponding region of the source image is often used as the measure. Let f(x, y) be the M×N source image and g(s, t) the S×T template (S ≤ M, T ≤ N). The squared-error measure is then defined as

$$D(x,y)=\sum_{s=0}^{S-1}\sum_{t=0}^{T-1}\left[f(x+s,y+t)-g(s,t)\right]^2 = A - 2B + C,$$

where

$$A=\sum_{s=0}^{S-1}\sum_{t=0}^{T-1} f^2(x+s,y+t),\qquad B=\sum_{s=0}^{S-1}\sum_{t=0}^{T-1} f(x+s,y+t)\,g(s,t),\qquad C=\sum_{s=0}^{S-1}\sum_{t=0}^{T-1} g^2(s,t).$$

C depends only on the template, so it is constant. If A is also taken as constant, the cross-correlation term 2B alone can be used for matching. D(x, y) is then smallest where B is largest, so the template is considered to match the image where B(x, y) reaches its maximum. In practice A is usually not constant, so this assumption introduces errors, and in severe cases the match fails. The normalized cross-correlation is therefore used as the similarity measure instead:

$$R(x,y)=\frac{\sum_{s=0}^{S-1}\sum_{t=0}^{T-1} f(x+s,y+t)\,g(s,t)}{\sqrt{\sum_{s=0}^{S-1}\sum_{t=0}^{T-1} f^2(x+s,y+t)}\;\sqrt{\sum_{s=0}^{S-1}\sum_{t=0}^{T-1} g^2(s,t)}}.$$

By the Cauchy-Schwarz inequality, R(x, y) ≤ 1, with equality when the image region is a scaled copy of the template. A match is therefore declared where R is largest.

4.3 Improved template matching algorithm

The template matching algorithm above requires a very large amount of computation. Correlation is a special form of convolution, and Matlab computes FFTs efficiently. The correlation can therefore be evaluated in the frequency domain with the FFT and brought back to the spatial domain with the inverse transform.
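
The road-edge step at the start of this part can be sketched as follows: a Hough transform on the edge pixels, then conversion from pixels to ground distance. This is only an illustration. The 1° angle step, the integer ρ bins and the calibration model are hypothetical choices, not details given in the paper. The calibration model assumes a flat floor, a road of known width measured on a single image row, and a camera on the robot's center line.

```python
import math


def hough_lines(edge_points, theta_step_deg=1):
    """Vote in (rho, theta) space for x*cos(theta) + y*sin(theta) = rho and
    return the accumulator as a dict {(rho, theta_deg): votes}."""
    acc = {}
    for x, y in edge_points:
        for theta_deg in range(0, 180, theta_step_deg):
            t = math.radians(theta_deg)
            rho = round(x * math.cos(t) + y * math.sin(t))
            acc[(rho, theta_deg)] = acc.get((rho, theta_deg), 0) + 1
    return acc


def strongest_lines(acc, n=2):
    """The n accumulator cells with the most votes, i.e. the best-supported lines."""
    return sorted(acc, key=acc.get, reverse=True)[:n]


def line_x_at_row(rho, theta_deg, y):
    """Column where the line x*cos(t) + y*sin(t) = rho crosses image row y
    (undefined for horizontal lines, theta = 90 degrees)."""
    t = math.radians(theta_deg)
    return (rho - y * math.sin(t)) / math.cos(t)


def lateral_offset_m(left_x, right_x, image_width, road_width_m):
    """Offset of the camera axis from the road center line, in meters.
    Positive means the robot is to the right of the road center.
    Hypothetical calibration: road width known, measured on one image row."""
    meters_per_pixel = road_width_m / (right_x - left_x)
    road_center_px = (left_x + right_x) / 2.0
    return (image_width / 2.0 - road_center_px) * meters_per_pixel
```

In a real image the two road edges converge because of perspective. The edge columns would then be evaluated with `line_x_at_row` on the same row that was used for calibration.
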
The positioning template is rotated by 180°. The image and the rotated template are then Fourier transformed, and the two transforms are multiplied element by element. The inverse FFT of the product returns spatial-domain values that are equivalent to the correlation. After the maximum of these spatial-domain values is found, a suitable threshold chosen relative to that maximum determines the position of the target point. In the experiment, once the template is matched, the target and background colors are binarized, the target is marked with a red cross, and the data are updated continuously. A desired stopping pixel position is set (for example, the center of the image), and the robot then adjusts its position automatically, as shown in Figure 5. Figure 5 shows that the robot needs to move to the right.

5 Experimental results and conclusions

Based on the above design, experiments were carried out on robot motion control and path planning. Image processing was simulated in Matlab, which can automatically select a suitable segmentation threshold and gives good edge detection. However, light intensity and other factors sometimes prevented threshold segmentation from achieving the ideal result. In the VC environment the robot's motion can be controlled, and template matching achieves good results. Future work will focus on image processing in the Visual C++ 6.0 environment to allow better control of robot movement. In summary, the system design enables the robot to identify image information accurately in complex and changing environments. The robot can then make correct decisions and perform the actions needed to achieve its goals.
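
The template-matching procedure of Sections 4.2 and 4.3 can be sketched in plain Python in three parts. The first computes the cross-correlation term through the frequency domain: 180° rotation of the template, 2-D FFT, element-wise product, inverse FFT. The second uses the normalized cross-correlation (the correlation divided by the energies of the image window and the template) to accept the peak. The third is a simple stop-or-steer rule against a desired pixel column. This is an illustration, not the paper's Matlab/VC++ code. The radix-2 FFT is written out only to keep the example self-contained, and the acceptance threshold, the dead band and the function names are hypothetical.

```python
import cmath
import math


def fft(a):
    """Recursive radix-2 Cooley-Tukey FFT; len(a) must be a power of two."""
    n = len(a)
    if n == 1:
        return [complex(a[0])]
    even, odd = fft(a[0::2]), fft(a[1::2])
    out = [0j] * n
    for k in range(n // 2):
        w = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k], out[k + n // 2] = even[k] + w, even[k] - w
    return out


def ifft(a):
    """Inverse FFT through the identity ifft(a) = conj(fft(conj(a))) / n."""
    n = len(a)
    return [v.conjugate() / n for v in fft([v.conjugate() for v in a])]


def fft2(m, transform=fft):
    """Separable 2-D transform: every row, then every column."""
    rows = [transform(r) for r in m]
    cols = [transform(list(c)) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]


def next_pow2(n):
    return 1 << (n - 1).bit_length()


def correlate_fft(f, g):
    """B(x, y) = sum over s, t of f(x+s, y+t) * g(s, t) for every offset where
    the template fits, computed as a convolution with the template rotated by
    180 degrees. Zero padding to at least (M+S-1) x (N+T-1) makes the FFT's
    circular convolution equal to the linear one."""
    M, N, S, T = len(f), len(f[0]), len(g), len(g[0])
    P, Q = next_pow2(M + S - 1), next_pow2(N + T - 1)

    def pad(img):
        return [[img[i][j] if i < len(img) and j < len(img[0]) else 0.0
                 for j in range(Q)] for i in range(P)]

    g_rot = [row[::-1] for row in g[::-1]]  # 180-degree rotation
    F, G = fft2(pad(f)), fft2(pad(g_rot))
    H = fft2([[F[i][j] * G[i][j] for j in range(Q)] for i in range(P)], ifft)
    return [[H[x + S - 1][y + T - 1].real for y in range(N - T + 1)]
            for x in range(M - S + 1)]


def ncc(f, g, x, y):
    """Normalized cross-correlation of template g with the window of f at (x, y)."""
    pairs = [(f[x + s][y + t], g[s][t]) for s in range(len(g)) for t in range(len(g[0]))]
    num = sum(a * b for a, b in pairs)
    den = math.sqrt(sum(a * a for a, _ in pairs)) * math.sqrt(sum(b * b for _, b in pairs))
    return num / den if den else 0.0


def locate(f, g, threshold=0.95):
    """Take the peak of B and accept it only if its NCC passes a
    (hypothetical) threshold; returns (row, col) or None."""
    b = correlate_fft(f, g)
    _, x, y = max((v, x, y) for x, row in enumerate(b) for y, v in enumerate(row))
    return (x, y) if ncc(f, g, x, y) >= threshold else None


def steer(target_col, desired_col, dead_band=5):
    """Hypothetical rule: stop when the target lies within dead_band pixels
    of the desired column, otherwise move toward it."""
    error = target_col - desired_col
    if abs(error) <= dead_band:
        return "stop"
    return "right" if error > 0 else "left"
```

Zero padding is what makes the FFT's circular convolution equal to the ordinary correlation. Without it, values from the opposite image border would wrap into the result.
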

The Co-Al co-doped barium titanate lead-free piezoelectric ceramic was successfully developed by Yuhai company through repeated experiments. By studying how Co-Al co-doping influences the structure and properties of barium titanate-based piezoelectric ceramics, Yuhai optimized the formulation and preparation technology of these ceramics. Yuhai's BaTiO3 is prepared by the conventional solid-phase sintering method. It has a piezoelectric constant d33 > 170 pC/N, a dielectric loss tan δ ≤ 0.5% and an electromechanical coupling coefficient Kp ≥ 0.34.

Barium titanate lead-free piezoelectric ceramics are important basic materials for modern science and technology. They are widely used to manufacture ultrasonic transducers, underwater acoustic transducers, electroacoustic transducers, ceramic filters, ceramic transformers, ceramic frequency discriminators, high-voltage generators, infrared detectors, surface acoustic wave devices, electro-optic devices, ignition and detonation devices, piezoelectric gyroscopes and so on.

Applications: military, ocean, fishery, scientific research, mine detection, daily life and other fields.

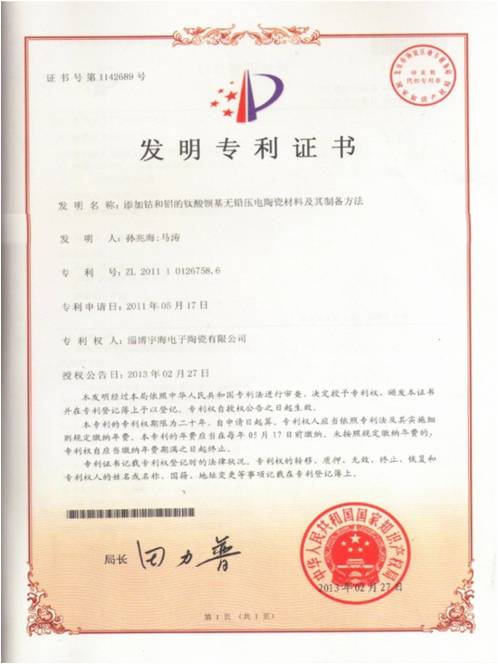

Chinese patent for Yuhai company's BaTiO3

Chinese Patent No.: ZL 2011 1 0126758.6

Name: Lead-free Barium Titanate Piezoelectric Material with Addition of Cobalt and Aluminum

Lead-free piezo material BaTiO3

| Property | Symbol | Unit | BaTiO3 |
|---|---|---|---|
| Dielectric constant | ε33T/ε0 | | 1260 |
| Coupling factor | Kp | | 0.34 |
| | K31 | | 0.196 |
| | K33 | | 0.43 |
| | Kt | | 0.32 |
| Piezoelectric coefficient | d31 | 10⁻¹² m/V | -60 |
| | d33 | 10⁻¹² m/V | 160 |
| | g31 | 10⁻³ V·m/N | -5.4 |
| | g33 | 10⁻³ V·m/N | 14.3 |
| Frequency coefficients | Np | | 3180 |
| | N1 | | 2280 |
| | N3 | | |
| | Nt | | 2675 |
| Elastic compliance coefficient | s11E | 10⁻¹² m²/N | 8.4 |
| Mechanical quality factor | Qm | | 1200 |
| Dielectric loss factor | tan δ | % | 0.5 |
| Density | ρ | g/cm³ | 5.6 |
| Curie temperature | Tc | °C | 115 |
| Young's modulus | Y11E | 10⁹ N/m² | 119 |
| Poisson's ratio | | | 0.33 |
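
The tabulated voltage coefficients can be cross-checked against the charge coefficients with the standard relation g = d / (ε0 · εr), where εr is the relative permittivity ε33T/ε0. The Young's modulus can be checked with Y11E = 1/s11E. The short check below uses only table values plus the vacuum permittivity. Small differences come from rounding in the table.

```python
EPSILON_0 = 8.8541878128e-12  # vacuum permittivity, F/m

# Values from the BaTiO3 table above
eps_r33 = 1260                 # relative permittivity eps33T / eps0
d31, d33 = -60e-12, 160e-12    # m/V (equivalently C/N)
s11_E = 8.4e-12                # elastic compliance, m^2/N


def g_from_d(d, eps_r):
    """Piezoelectric voltage coefficient g = d / (eps0 * eps_r), in V*m/N."""
    return d / (EPSILON_0 * eps_r)


g31 = g_from_d(d31, eps_r33)
g33 = g_from_d(d33, eps_r33)
young_11 = 1.0 / s11_E         # Young's modulus, N/m^2

print(f"g31 = {g31 * 1e3:.1f}e-3 V*m/N")       # table: -5.4
print(f"g33 = {g33 * 1e3:.1f}e-3 V*m/N")       # table: 14.3
print(f"YE11 = {young_11 / 1e9:.0f}e9 N/m^2")  # table: 119
```

All three computed values agree with the table to its printed precision, which suggests the table entries are internally consistent.
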

Zibo Yuhai Electronic Ceramic Co., Ltd., https://www.yhpiezo.com