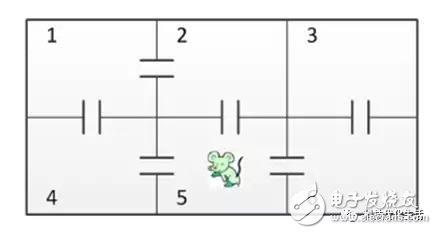

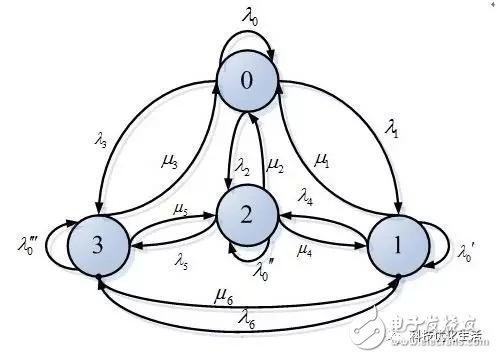

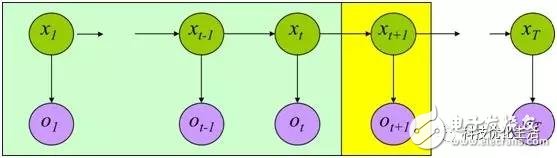

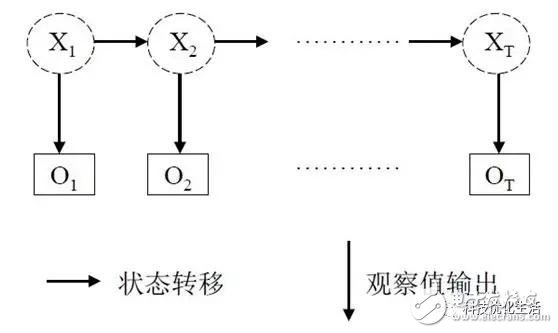

The "MM" in the Markov model (MM) discussed here has nothing to do with the Chinese internet slang "MM". It comes from the Russian "old driver" (Chinese internet slang for a seasoned expert) Markov. His full name is Andrey Andreyevich Markov (Андрей Андреевич Марков), and he was a Russian mathematician. In 1874, the 18-year-old Markov entered St. Petersburg University, where he studied under Chebyshev (another Russian "old driver", known for the famous Chebyshev theorem, one of the foundations of probability theory and mathematical statistics). After earning his doctorate in physics and mathematics, he stayed on to teach, becoming a professor at St. Petersburg University and an academician of the St. Petersburg Academy of Sciences. He made major contributions to probability theory, number theory, function approximation theory, and differential equations.

Overview of the Markov model: The Markov model (MM) is a statistical model. Its prototype, the Markov chain, was proposed by Markov in 1906; in 1936, Kolmogorov generalized it to countably infinite state spaces. The Markov chain is closely related to the Markov process, an important tool for studying the state spaces of discrete-event dynamic systems, whose mathematical foundation is the theory of stochastic processes.

Markov property: A stochastic process {X(t), t ∈ T} has the Markov property if, for any times t_1 < t_2 < ... < t_n < t_{n+1} in T, the conditional distribution of X(t_{n+1}) given X(t_1) = x_1, ..., X(t_n) = x_n depends only on the most recent value X(t_n) = x_n:

$$P\left(X(t_{n+1}) \le x_{n+1} \mid X(t_1) = x_1, \dots, X(t_n) = x_n\right) = P\left(X(t_{n+1}) \le x_{n+1} \mid X(t_n) = x_n\right)$$

In other words, given the present, the future is independent of the past. This property is also known as the absence of aftereffects, or memorylessness. If X(t) is a discrete random variable, the Markov property can be written with point probabilities:

$$P\left(X(t_{n+1}) = x_{n+1} \mid X(t_1) = x_1, \dots, X(t_n) = x_n\right) = P\left(X(t_{n+1}) = x_{n+1} \mid X(t_n) = x_n\right)$$

Markov process: A stochastic process {X(t), t ∈ T} that satisfies the Markov property is called a Markov process. Examples include a frog hopping between lily pads in a lotus pond, the Brownian motion of particles in a liquid, the number of people infected during an epidemic, an electron jumping between energy levels in an atom, population growth, and a mouse moving through a maze. Common Markov processes include the following. (1) An independent stochastic process (one whose values at different times are mutually independent) is a Markov process. (2) A process with independent increments is a Markov process.
(3) The Poisson process is a Markov process. (4) The Wiener process is a Markov process. (5) The random walk of a particle is a Markov process.

Unlike machine learning algorithms such as Naïve Bayes and support vector machines, Markov processes do not require labeled data, and they are used more for control and decision-making problems. Prediction with a Markov process follows these basic steps: first determine the states of the system, then determine the transition probabilities between states, then make the prediction, and finally analyze the results. If the results are reasonable, the prediction report can be submitted; otherwise, check whether the system states and the state transition probabilities are correct.

Markov chain: In mathematics, a Markov chain (MC) is a Markov process in which both time and the states are discrete. Given the current state, the past is irrelevant for predicting the future. At each step, the system may move from one state to another, or remain in its current state, according to a probability distribution. A change of state is called a transition, and the probabilities associated with the various state changes are called transition probabilities.

Markov chain principle: A Markov chain describes a sequence of states in which each state value depends on a limited number of preceding states (for a first-order chain, only on the immediately preceding one). Formally, a Markov chain is a sequence of random variables X_1, X_2, X_3, ... with the Markov property. The set of all values these variables can take is called the state space, and the value of X_n is the state of the chain at time n. As a result, predicting the future with a Markov chain requires only recent or current data.
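The prediction steps above (determine the states, determine the transition probabilities, then predict) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a method taken from the article: it assumes a first-order chain, estimates each transition probability p_ij as the fraction of observed transitions out of state i that go to state j, and uses a small hypothetical "sunny"/"rainy" weather sequence. The function names `estimate_transition_matrix` and `predict` are made up for this example.

```python
def estimate_transition_matrix(sequence, states):
    """Estimate p_ij = (# of i -> j transitions) / (# of transitions out of i).

    Assumes a first-order Markov chain observed as a single state sequence.
    """
    index = {s: k for k, s in enumerate(states)}
    n = len(states)
    counts = [[0] * n for _ in range(n)]
    for current, nxt in zip(sequence, sequence[1:]):
        counts[index[current]][index[nxt]] += 1
    matrix = []
    for row in counts:
        total = sum(row)
        # Assumption for this sketch: a state never left in the data gets a uniform row.
        matrix.append([c / total for c in row] if total else [1.0 / n] * n)
    return matrix


def predict(distribution, matrix, steps=1):
    """Propagate state probabilities: pi_{t+1}[j] = sum_i pi_t[i] * p_ij.

    Only the current distribution is used, which is the Markov property at work.
    """
    n = len(matrix)
    for _ in range(steps):
        distribution = [
            sum(distribution[i] * matrix[i][j] for i in range(n)) for j in range(n)
        ]
    return distribution


if __name__ == "__main__":
    states = ["sunny", "rainy"]
    # Hypothetical observed daily weather, oldest first.
    observed = ["sunny", "sunny", "sunny", "rainy", "rainy",
                "rainy", "sunny", "sunny", "rainy", "sunny"]
    P = estimate_transition_matrix(observed, states)
    print(P)                               # [[0.6, 0.4], [0.5, 0.5]]
    today = [0.0, 1.0]                     # today is rainy
    print(predict(today, P, steps=1))      # [0.5, 0.5]
    print(predict(today, P, steps=2))      # distribution two days ahead
```

Counting transitions is the simplest (maximum-likelihood) way to fill in the matrix; with little data, some rows can be poorly estimated, which is exactly the situation the "check whether the state transition probabilities are correct" step is meant to catch.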
Markov chain properties: Let P = (p_{ij}) be the state transition matrix of a Markov chain with N states, where p_{ij} is the probability of moving from state i to state j. Each element p_{ij} is called a state transition probability, and P has the following two properties:

1) Non-negativity: since each state transition probability is a probability, it is non-negative:

$$p_{ij} \ge 0, \quad i, j = 1, 2, \dots, N$$

2) Row normalization: from probability theory, the entries in each row of the state transition matrix sum to 1:

$$\sum_{j=1}^{N} p_{ij} = 1, \quad i = 1, 2, \dots, N$$

Markov sequence classifier: A sequence classifier (or sequence labeler) is a model that assigns a class or label to each unit in a sequence. Part-of-speech tagging, speech recognition, sentence segmentation, grapheme-to-phoneme conversion, partial parsing, chunking, named entity recognition, and information extraction are all sequence classification tasks. The Markov sequence classifiers are: 1) the visible Markov model (VMM), also simply called the Markov model (MM); and 2) the hidden Markov model (HMM), which describes a Markov process with hidden, unknown parameters. An HMM is a doubly stochastic process: a hidden Markov chain of states, together with a general stochastic process that generates the observations from those states.

Markov model applications: Markov models are widely used in speech recognition, automatic part-of-speech tagging, phonetic conversion, probabilistic grammars, and other natural language processing tasks, as well as in arithmetic coding, geostatistics, market forecasting for enterprise products, population processes, and bioinformatics (coding-region and gene prediction). After long development, especially in speech recognition, they have become a general-purpose statistical tool. 1) Statistical modeling of states: Markov chains are commonly used in queueing theory and statistical modeling, and they can also serve as signal models for entropy coding techniques. Markov chain prediction is an effective dynamic forecasting method for stochastic processes.
Markov chains also have numerous biological applications, especially in modeling population processes. 2) Hidden Markov models (HMMs) are used in bioinformatics for coding-region and gene prediction. HMMs began to be applied to the analysis of biological sequences, especially DNA, in the second half of the 1980s, and have since become an indispensable technique in bioinformatics. For decades, HMMs were also regarded as the most successful approach to building fast and accurate speech recognition systems: complex speech recognition problems can be expressed and solved quite simply with them, a striking testament to the power of mathematical models. 3) Markov chain Monte Carlo (MCMC): MCMC is a Monte Carlo method, carried out by computer simulation, within the Bayesian framework. Introducing a Markov chain (MC) into Monte Carlo (MC) simulation lets the sampling distribution change dynamically as the simulation proceeds, overcoming the limitation of traditional Monte Carlo integration, which can only simulate statically. MCMC is a widely used statistical computing method.

Conclusion: The Markov model is a statistical model. Its prototype, the Markov chain, was proposed by the Russian mathematician Markov in 1906. Predicting the future with a Markov chain requires only recent or current data. The Markov chain is closely related to the Markov process, an important tool for studying the state spaces of discrete-event dynamic systems, whose mathematical foundation is the theory of stochastic processes. Markov models are widely used in fields such as natural language processing in artificial intelligence.
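The hidden Markov model described in the sequence-classifier paragraph and in point 2) is a doubly stochastic process: a hidden Markov chain of states, plus observations emitted from those states. A standard way to compute how likely an observation sequence is under an HMM is the forward algorithm, sketched below in Python. The two-state, two-symbol parameters are hypothetical, chosen only for illustration, and the function names are made up for this sketch. The code also checks that the start, transition, and emission probabilities satisfy the transition-matrix properties (non-negative entries, rows summing to 1).

```python
def is_row_stochastic(matrix, tol=1e-9):
    """True if every entry is non-negative and every row sums to 1 (within tol)."""
    return all(
        all(p >= 0 for p in row) and abs(sum(row) - 1.0) <= tol for row in matrix
    )


def forward_probability(observations, start, trans, emit):
    """Forward algorithm: P(o_1, ..., o_T) for a discrete HMM.

    start[i]    = P(first hidden state is i)
    trans[i][j] = P(next hidden state is j | current hidden state is i)
    emit[i][k]  = P(observed symbol k | hidden state is i)
    observations is a non-empty list of symbol indices.
    """
    if not (is_row_stochastic([start]) and is_row_stochastic(trans)
            and is_row_stochastic(emit)):
        raise ValueError("probabilities must be non-negative and rows must sum to 1")
    n = len(start)
    # alpha[i] = P(o_1..o_t, hidden state at time t is i)
    alpha = [start[i] * emit[i][observations[0]] for i in range(n)]
    for symbol in observations[1:]:
        alpha = [
            sum(alpha[i] * trans[i][j] for i in range(n)) * emit[j][symbol]
            for j in range(n)
        ]
    # Summing over the final hidden state marginalizes it out.
    return sum(alpha)


if __name__ == "__main__":
    # Hypothetical 2-state HMM over 2 observable symbols (0 and 1).
    start = [0.6, 0.4]
    trans = [[0.7, 0.3], [0.4, 0.6]]
    emit = [[0.5, 0.5], [0.1, 0.9]]
    print(forward_probability([0, 1], start, trans, emit))  # about 0.2156
```

The forward recursion reuses the previous step's alpha values, so its cost grows linearly with the sequence length instead of exponentially, as summing over every hidden state path would.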
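Point 3) describes MCMC only in words. One widely used MCMC algorithm is Metropolis-Hastings; the Python sketch below is its random-walk variant, chosen here for illustration rather than taken from the article. It builds a Markov chain whose stationary distribution is the target density, so after a burn-in period the chain's states can be used as (correlated) samples from that target. The target (a standard normal, known only up to its normalizing constant), the step size, the burn-in length, the seed, and the function name are all assumptions for this example.

```python
import math
import random


def metropolis_hastings(log_target, n_samples, x0=0.0, step=1.0, burn_in=1000, seed=0):
    """Random-walk Metropolis-Hastings for a 1-D target density.

    log_target(x) may omit additive constants, i.e. the density only has to be
    known up to a normalizing factor.
    """
    rng = random.Random(seed)
    x, log_px = x0, log_target(x0)
    samples = []
    for i in range(burn_in + n_samples):
        # Symmetric Gaussian proposal, so the Hastings correction cancels.
        proposal = x + rng.gauss(0.0, step)
        log_pp = log_target(proposal)
        # Accept with probability min(1, p(proposal) / p(x)); otherwise stay put.
        if rng.random() < math.exp(min(0.0, log_pp - log_px)):
            x, log_px = proposal, log_pp
        if i >= burn_in:
            samples.append(x)
    return samples


if __name__ == "__main__":
    # Unnormalized log density of a standard normal distribution.
    samples = metropolis_hastings(lambda x: -0.5 * x * x, n_samples=50_000)
    mean = sum(samples) / len(samples)
    var = sum((s - mean) ** 2 for s in samples) / len(samples)
    print(f"mean ~ {mean:.3f}, variance ~ {var:.3f}")  # should be close to 0 and 1
```

Because each retained state depends on the previous one, the samples are correlated, so the effective sample size is smaller than `n_samples`.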